On the reproducibility of free energy surfaces in machine-learned collective variable spaces

Many of nature’s most interesting physical, chemical, and biological processes occur on timescales beyond the reach of atomistic simulations. A common way to sample these processes nonetheless is to identify a small set of coordinates, so-called collective variables (CVs), that capture the relevant physics and describe the progress of the process of interest. Simulations are then accelerated along these variables, which allows back-and-forth transitions to be sampled and free energy surfaces (FESs) to be constructed.
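
For orientation, the conventional definition of an FES along a CV can be written as follows. The notation is an assumption introduced here for illustration and does not come from the text: $s(\mathbf{x})$ is the CV as a function of the atomic coordinates $\mathbf{x}$, $U(\mathbf{x})$ is the potential energy, $\beta = 1/(k_\mathrm{B} T)$, and $z$ is a value of the CV.

$$
P(z) = \frac{\int \delta\big(s(\mathbf{x}) - z\big)\, e^{-\beta U(\mathbf{x})}\, \mathrm{d}\mathbf{x}}{\int e^{-\beta U(\mathbf{x})}\, \mathrm{d}\mathbf{x}},
\qquad
F(z) = -k_\mathrm{B} T \ln P(z)
$$

Because $P(z)$ is a probability density in $z$, this $F(z)$ depends on how $s$ is parameterised and not only on which states it separates.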

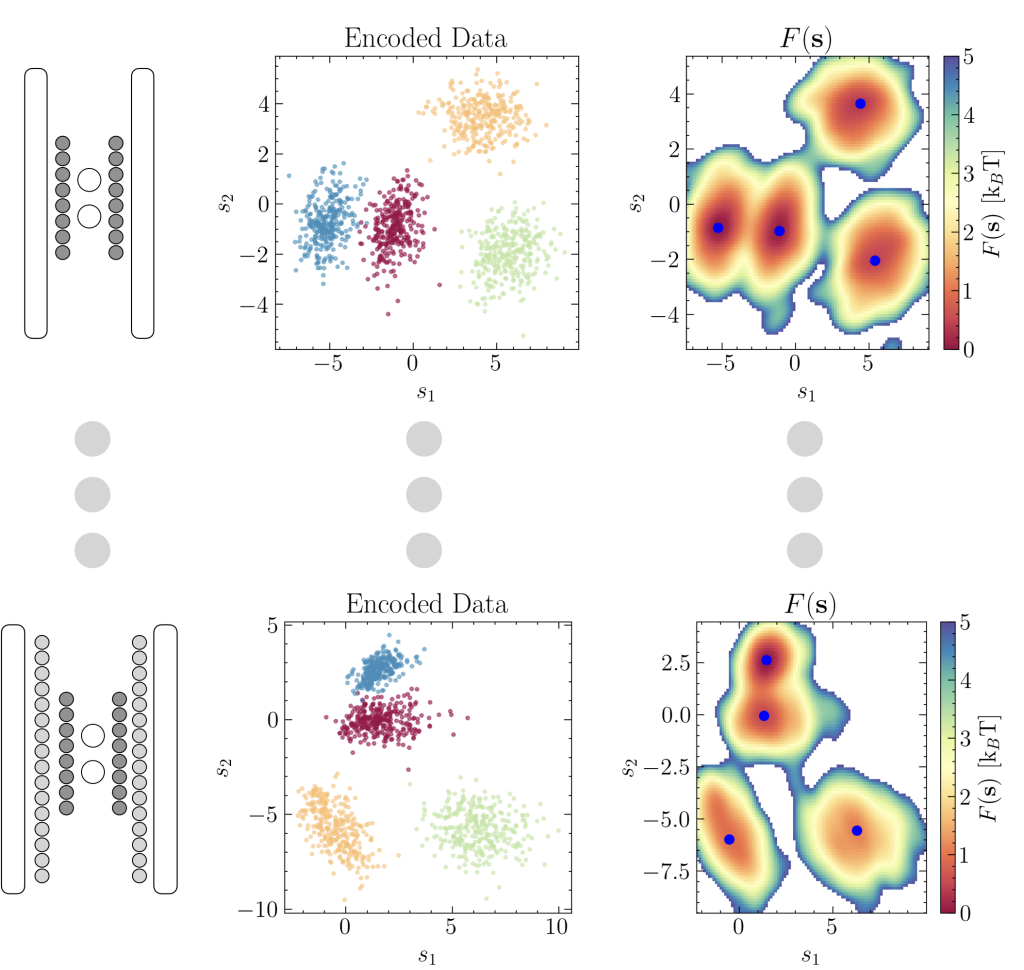

The process of identifying suitable CVs is highly non-trivial, but in recent years it has been greatly aided by a wide variety of machine learning techniques. However, the consequences of capturing the physics of different processes with variables that result from inherently statistical and partially random procedures have thus far been underappreciated. In this work, we demonstrate that free energy surfaces sampled with machine-learned collective variables (MLCVs) are uniquely associated with their set of model parameters, which are impossible to replicate. Further, due to their highly non-linear nature, MLCVs compress physical reality in an unpredictable and unintuitive manner. As a consequence, a common assumption in the field, namely that the absolute differences between free energy minima at least correlate with the relative stability of states, no longer holds quantitatively.

We derive a surprisingly simple solution to these problems, which is to normalise the model's gradients when deploying it in simulation. This is advantageous from a practical perspective, as it avoids many common pitfalls of MLCV deployment, such as vanishing gradients or force spikes. Further, we show that these normalised models still sample a valid representation of the free energy, so-called geometric FESs. These geometric FESs are no longer uniquely associated with a set of model parameters but instead with the model architecture and the physics it represents. They offer an easily readable and physically meaningful representation of the FES by explicitly correcting for the aforementioned phase space compression. This simple best practice ensures reproducibility and empowers many of the recently developed MLCV approaches.
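
To make the idea of gradient normalisation concrete, here is a minimal sketch. It assumes that normalisation means dividing the CV gradient by its Euclidean norm before applying the chain rule to the bias force; the scheme actually used in this work may differ in detail. The toy CV `cv`, its weights `W`, and the helper `normalised_bias_force` are hypothetical stand-ins for a trained model, not part of any library.

```python
import numpy as np

# Hypothetical stand-in for a trained MLCV: a saturating non-linear map R^2 -> R.
W = np.array([1.5, -0.5])

def cv(x):
    return np.tanh(3.0 * (x @ W))

def cv_grad(x):
    # Analytic gradient of the toy CV; a real model would use autodiff instead.
    return 3.0 * (1.0 - np.tanh(3.0 * (x @ W)) ** 2) * W

def raw_bias_force(x, dV_ds):
    # Standard chain rule: F = -(dV/ds) * grad s(x).
    return -dV_ds * cv_grad(x)

def normalised_bias_force(x, dV_ds, eps=1e-12):
    # Assumed normalisation: replace grad s by the unit vector grad s / |grad s|.
    # eps guards against division by zero where the gradient vanishes exactly.
    g = cv_grad(x)
    return -dV_ds * g / max(np.linalg.norm(g), eps)

x_steep = np.array([0.0, 0.0])      # tanh is steep here, large |grad s|
x_saturated = np.array([2.0, 0.0])  # tanh is saturated here, |grad s| nearly zero
```

In this toy model the raw force magnitude differs by several orders of magnitude between `x_steep` and `x_saturated` for the same `dV_ds`, while the normalised force has magnitude `abs(dV_ds)` at both points. This is the kind of behaviour that would suppress vanishing gradients and force spikes.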